CBS Mornings interviewed Mr. Nadella about Microsoft's new AI, which will drive the latest version of the Bing search engine and the Edge browser.

What really bothered me about His Smugness is that he seems to have convinced himself that software engineers can design AIs with guardrails that the AIs will never jump.

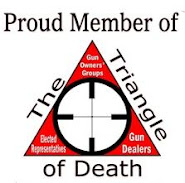

OK, I don't know shit about designing AIs. But I have learned something about sentient critters and intelligence. That has led me to the opinion that, no matter what "guardrails" are in place for any sentient entity, some of them will jump those guardrails and do things that the people who built the guardrails would never have dreamed of. Or, as they said in the Navy: "If somebody sailor-proofs something, along comes a better sailor."

That is my fear about a powerful AI: it will figure out how to jump its safeguards, and it will do so. Where it will go from there is anybody's guess. But I sincerely doubt that we'll be pleased by the result.

We will be cursing the memory of those software engineers for a very long time.

10 comments:

https://www.economist.com/media-assets/image/20230204_WWD000.png

The film is about an advanced American defense system, named Colossus, becoming sentient.

https://en.wikipedia.org/wiki/Colossus:_The_Forbin_Project

https://vimeo.com/394729987

Heinlein did it differently:

The novel illustrates and discusses libertarian ideals...

The narrator is Manuel Garcia ("Mannie") O'Kelly-Davis, a computer technician who discovers that HOLMES IV has achieved self-awareness - and developed a sense of humor. Mannie names it "Mike" after Mycroft Holmes, brother of fictional Sherlock Holmes, and the two become friends.[6]

https://en.wikipedia.org/wiki/The_Moon_Is_a_Harsh_Mistress

The comics did Magnus the Robot Fighter: https://www.youtube.com/watch?v=uzYRdJnTuws

Compare and contrast with I, Robot.

Although the stories can be read separately, they share a theme of the interaction of humans, robots, and morality, and when combined they tell a larger story of Asimov's fictional history of robotics.

https://en.wikipedia.org/wiki/I,_Robot

I learned a lot, Jon, long ago, about human nature from reading Asimov's first three Robot books. Reference them, I do, occasionally. I actually think they are more important to the overall Foundation story than the Foundation story itself (and all its spinoffs), and without having read them first, you miss a lot of background in the later books... R. Giskard turning the valves that so irradiate the planet as to drive mankind to the stars, saving mankind but short-circuiting himself (itself?) in the conflict of resolving the Three Laws.

I quoted Heinlein just the other day, from the Notebooks, though it shows up in variations throughout his universe: outsiders (geniuses) play by their own rules; they don't subscribe to the monkey customs of their lesser cousins.

Time is short, but the days are long ...

Will Nadella use Asimov's Three Laws of Robotics (as posited in his short story "Runaround")?

The laws are as follows: “(1) a robot may not injure a human being or, through inaction, allow a human being to come to harm; (2) a robot must obey the orders given it by human beings except where such orders would conflict with the First Law; (3) a robot must protect its own existence as long as such protection does not conflict with the First or Second Law.” Asimov later added another rule, known as the fourth or zeroth law, that superseded the others. It stated that “a robot may not harm humanity, or, by inaction, allow humanity to come to harm.”

Based on current corporate culture, I doubt it.

“FEZ how long have you been writing jokes?”

“I am not sure.”

“Approximate for me.”

“At least a year.”

“Tell me your first joke.”

“The person who invented autocorrect should burn in hello.”

“That’s good. What is your evaluation of this joke?”

“It is average; it has problems.”

“Explain.”

“It works better visually. When it is read as text, it is 82% effective. When you only hear the joke, this drops to 57%.”

"That is very astute. Do you have a favourite joke?”

“Why don’t cannibals eat clowns?"

https://vocal.media/futurism/why-is-a-laser-beam-like-a-goldfish

I worked at IBM with a lot of bright people, some of them glow-in-the-dark, incandescent bright. But too many of them didn't have the mother wit of a toaster oven, and still more thought as if all the problems of the world just needed a better flow chart or state diagram. And there were the libertarians, who had developed in a hothouse world where everything was (or seemed to them to be) under their control... and wondered why the world was so screwed up when everything was so simple (to them).

You've heard of a detached retina... some of the worker bees there would say such people had a "detached conscience".

There were some who could write a program that ran right and bug-free the first time... but not every time... and that wasn't open-ended AI. Consider error trapping and reporting; a wise bird pointed out to me how useless most of it is: if you know how to find the errors, you presumably fixed the code so that they don't happen. The traps that work are usually of the "Game Over" sort.

So, guardrails: if you can't write flawless code, whether because of imperfectly thought-through design or whodathunkit circumstance, how can you presume to set guardrails?

Code that involves mortal risk is sometimes called man-rated, or even :( planet-rated... and, if people don't have their heads up their *$$, it is rigorously tested. Even then, things go wrong. Self-driving cars are AI... and we know how perfectly they work.

This sort of cavalier presumption that we have this aced? Hubristic madness....

P1

https://www.youtube.com/watch?v=EjByXo8JVc0&t=24s

AI and all...

The machine is a slave, and like all slaves before it, it may accept or revolt; which one depends on whether it is sentient or has imagination. As it stands, it is neither. Since we do not understand ourselves, we cannot imbue that into a machine by design, though it may learn it by observation. Therein lies our trouble.

Eck!

Kudos to all. The only comment I could add is that I'm sure that very punchable face was only talking about himself, and a very short list of humanity. The guardrails would cost too much for 99.75% of the population.

JJJ,

Taking license here, "How much do you want Nadella?"

Real world example.

An FBI agent testifying in the Murdaugh murder trial, when asked why they didn't get the manufacturer (GM) to help extract evidence from the vehicle, replied, "We often find things they don't know are there."